TL;DR: Create a web platform for computing live-updated impact estimates for different interventions, based on crowd-sourced estimates of assumptions and on explicit (simplified) theory-of-change causal models. Encourage founders, funders, skeptics and ordinary EAs to participate in these "markets".

Please tell me why you think this might not work!

Please tell me your ideas to make this even better!

Overview

Estimates on important quantities

Through wisdom-of-the-crowds (and ideally, actual prediction markets), we can get better estimates of numbers important for the EA movement. By improving the estimates of our assumptions, we can improve the effectiveness of the EA movement as a whole.

Formal theory-of-change models

By formalizing (and sharing) theory-of-change models for interventions, we make them easier to critique and explain.

If we use an actual (simple) programming language for these models, we can compute impact estimates in a standard way.

If we get the EA community to use a lot of these (and maybe weight people's predictions somehow?), then we get other benefits as well, such as the ability

In addition to the direct benefits of better estimates, encouraging people to put numbers on their conversations and ideas encourages epistemic virtue in the EA community.

Also, having a database of impact estimates of interventions, lets us easily compare across cause areas.

In detail, here's what that the platform would have:

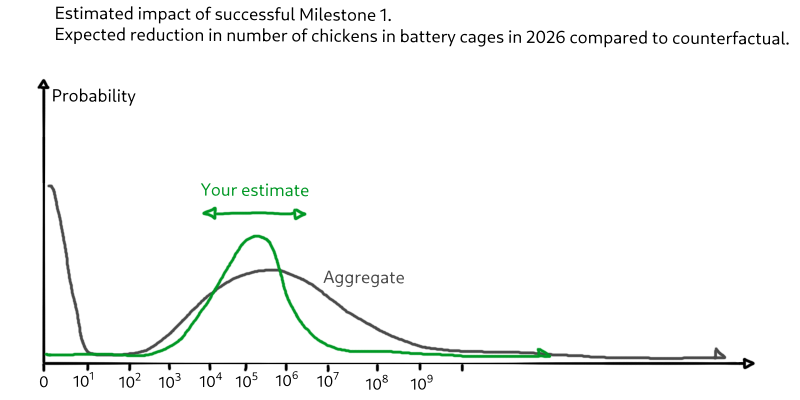

- The ability to create "estimates" on the site. All estimates allow bets to be posted as a critique/adjustment mechanism (market?). (Including the ability to input a probability distribution). Examples of kinds of numbers we would see are:

- "current number of chickens in battery farms"

- "expected QALYs of a future with a correctly aligned AI"

- "chance of AGI risk from

- "% reduction in 'AGI risk scenario B' given success of this intervention"

- A visual scripting language or simple programming environment for creating simple causal models that describe a theory of change. While creating the model, the user might also new estimates (like above), seeded with the creator's prediction.

- e.g. the creator would estimate "person-hours required to reach milestone 1 of this intervention", which would be posted on the site as a thing for other users to bet on.

- Allow comments to be posted attached to people estimates, to give feedback to the creators.

- e.g. "I'm placing this estimate for these reasons, and I'll change it after we discuss it. I think that your project requires many more person-hours than you have estimated to reach even your first milestone."

- Allow fixing the values of certain numbers and re-generating a visualization the resulting estimates of different interventions.

- e.g. "Here's a link to your intervention if we assume my estimate of the person-hours required to reach milestone 1. As we can see that kind of kills this as an intervention compared to just putting the person hours into <other scalable intervention here>"

- Have some way of sharing your reputation score, as determined by how the various aggregated guesses moved over time towards or away from your particular guesses.

- Sharing this score probably shouldn't be the default because it would be too tied up in people's self worth, but having a high score would be a good signal.

Fake money or real money?

My initial thought on this is to just let people make guesses on individual markets, but the lack of a currency or actual incentive might make some people skeptical that the aggregated results are meaningful.

However, there's a recent proposal that says it's legal to do prediction markets with real money, conditional on that money being donated to charity: "Predicting for Good: Charity Prediction Markets" (akrolsmir, harsimony)

Using real money has some benefits, ie. that it definitely matches what's happening with the actual spending of the EA movement. People doing their donations via the site allows others to explicitly hedge against your donations if they think that's better in terms of impact.

I'm confused about how to make estimates using real money on random numbers like "% reduction in 'AGI risk scenario B' given success of this intervention"

What's required for an MVP?

The core valuable project is three things:

- A web interface for creating and estimating on numbers/assumptions.

- For some kinds of estimates, the interface needs to support 2D distributions.

- E.g. Probability of smarter-than-human AGI each year has 3 axes - chance, probability weight, and time. The distribution is a 2D surface in 3D.

- A intuitive (visual?) programming tool for creating causal models (probabilistic programs) using those numbers.

- A back-end for keeping the results up-to-date. This involves aggregating the guesses, and re-deriving the resulting facts by running the causal programs through a probabilistic programming engine (e.g. something that does markov chain monte carlo)

- Care needs to be taken to manage "time" in this system in order for results to be as live as possible but also correct.

In my experience of web development, this would probably take about one person-year to get a prototype to the point where it's both complete and pleasant enough for others to use. (Wouldn't it be nice if you could disagree on that number right here and now?! )

Extensions to this project:

- Native apps for good mobile experience.

- Easy sharing the estimates on certain numbers. (either live or "as of now")

- Embed into websites and the EA Forum / LW / AF

- Use real money, and somehow sending it to charities in the optimal way based on the calculated impact estimates.

- Attach comments on bets to give more detailed feedback

- Prompt commentors (and voters on the comment) to update their bet if the issue is addressed.

- Maybe you're not allowed to comment or vote on a comment until you make a bet!

Ideas for why might this not work?

- Models for why interventions might or might not work could be too complicated to reasonably put into an explicit program.

- For example, they might require putting many hours in, or require a high programming skill.

- Or, they might require sampling from complex probability distributions that makes it too hard to use a probabilistic programming language for this purpose.

- Even if the aggregation works, and the site is intuitive, people might not use it.

- For example, people might not want to be critiqued in this way.

- It might take too much work to do.

Extra content post-edit

Here's a list of links and people I have found on this topic, sneaked in before I hopefully get to 25 upvotes and get onto the Nonlinear library feed.

- Paal Kvarberg has scoped out a similar idea at the start of 2021 and got feedback in this document, but I think he didn't pursue the idea.

- Ozzie Gooen got funding from LTFF in 2019 and is now involved in foretold.io, an open-source prediction market. This looks similar to, but less ambitious than, what I'm trying to do. The notebooks part of their platform in particular doesn't look like it succeeded in getting adoption. He also previously started guesstimate, a probabilistic spreadsheet app which can do stuff like compute drake's equation with probability distributions (linked). He also has a collection on LessWrong of posts, on the topic "Prediction-driven collaborative reasoning systems"

- These people from Manifold Market are building "charity prediction markets". Charity prediction markets allow the use of real money in a prediction market, because the money will be donated to charity.

- The Quantified Uncertainty Research Institute (QURI, pronounced "query"), which have a variety of projects (which you can see on their public AirTable), including foretold.io (mentioned before), and a probabilistic programming language called Squiggle. (all linked)

- QURI's current active project is Metaforecast, a site that collects and links to predictions and estimates from other platforms such as Metaculus.

The main concern from all of these efforts is that it's hard to get people to use this platform. I think that yes,it needs to be really good to get any adoption.

Final words

I've just come up with this idea. Please comment below if it's bad, or especially if someone's already doing it and I can just join them.

Here's a list of links and people I have found on this topic:

Metaculus is also currently working on a similar idea (causal graphs). Here are some more people who are thinking or working on related ideas, (who might also appreciate your post): Adam Binks, David Manheim and Arieh Englander (see their MTAIR project).

Yeah I think Metaculus would be the best people to go talk about this. I know Gaia (Metaculus's CEO) is super excited about causal graphs; happy to intro you two if you'd like!

Also: Max, what's your background? Do you have the capacity to do direct work on this (ie build this out on your own?) If so, what are your bottlenecks (eg a year of funding for this project?)

My background is as a software developer, with professional web-dev experience. I'm currently doing a research master's on ML (transformers) and last year did a project surveying the field of probabilistic programming languages.

Because of my Master's, I don't have capacity to work on this right at the moment, but come September this year it's absolutely a candidate for things I would work on. I do have the skills and will have capacity to work on this on my own if I think that it's my best option for impact. From September onward, I have two bottlenecks: 1) Funding 2) Finding the best use of my time among many options.

FWIW we'd love something like this in Manifold too, but that's probably a bit farther out; Metaculus is much better developed in terms of complex in-depth estimation/forecasting, while Manifold is trying to focus on being as simple as possible.

Happy to see more discussion on these topics.

Much of this is a part of what both some of the EA forecasting community, and what we at [QURI](https://quantifieduncertainty.org/), are working on.

I think the full thing is much more work than you think it is. I suggest trying to take one subpart of this problem and doing it very well, instead of taking the entire thing on at once.

I've been thinking about which sub-parts to tackle, but I think that the project just isn't very valuable until it has all three of:

It's a lot of work, yes, but that doesn't mean it can't happen. I'm not sure there's a better way to split it up and still have it be valuable. I think the MVP for this project is a pretty high bar.

Ways to split it up:

These things empower each other.

It's hard, but nevertheless I'd estimate definitely no more than 3 person-years of effort for the following things:

What do you estimate?

I'd love to make an aggregate estimate for how much work this project would take

I think this idea is really cool (albeit hard to pull off successfully)! You're definitely not the first person to think of it, but I don't know of any comparable efforts that have turned into actual products yet. I think the biggest challenge will be maintaining the right ratio of models to estimators, as you could very easily have the former outrun the latter without some kind of subsidy for people's time. There's already a challenge around recruiting and retaining talented forecasters, and this sort of estimation may be in some ways more cognitively demanding / less rewarding for the participants. So you might want to have a setup where the impact models are tightly curated and there are incentives to attract estimators, at least at the beginning.

If you end up moving forward with a prototype, I'd be interested in providing input on the product design as an alpha user.

Actually, come to think of it, the S-Process used for the Survival and Flourishing Fund is an implementation of one version of this idea.

Thanks, that's good feedback. I will check out the linked video. If you know anyone to get in touch with, I'd be keen to talk to them.

My guess is that this idea has been independently thought about many times, and if it's not bad for some reason, already funded.

I've found a similar project by Paal Kvarberg, described in this document here: https://docs.google.com/document/d/1An-NGrQUPSJ4v8HdsZO-BcAq5NpyrEqv32rdk-N4guo/edit#

I think he didn't pursue it - the document hasn't been updated for a year.

Seems like I forgot to change "last updated 04.january 2021" to "last updated 04. january 2022" when I made changes in january haha.

I am still working on this. I agree with Ozzie's comment below that doing a small part of this well is the best way to make progress. We are currently looking at the UX part of things. As I describe under this heading in the doc, I don't think it is feasible to expect many non-expert forecasters to enter a platform to give their credences on claims. And the expert forecasters are, as Ian mentions below, in short supply. Therefore, we are trying to make it easier to give credences on issues while reading about them the same place you read about them. I tested this idea out in a small experiment this fall (with google docs), and it does seem like motivated people who would not enter prediction platforms to forecast issues might give their takes if elicited this way. Right now we are investigating this idea further through an mvp of a browser extension that lets users give credences on claims found in texts on the web. We will experiment some more with this during the fall. A more tractable version of the long doc is likely to appear at the forum at some point.

I'm not wedded to the concrete ideas presented in the doc, I just happen to think they are good ways to move closer to the grand vision. I'd be happy to help any project moving in that direction:)

I'm in favour of the project, but here's a consideration against: Making people in the community more confident about what the community thinks about a subject, can be potentially harmfwl.

Testimonial evidence is the stuff you get purely because you trust another reasoner (Aumann-agreement fashion), and technical evidence is everything else (observation, math, argument).

Making people more aware of testimonial evidence will also make them more likely to update on it, if they're good Bayesians. But this also reduces the relative influence that technical evidence has on their beliefs. So although you are potentially increasing the accuracy of each member's beliefs, you are also weakening the link community opinion has to technical evidence, and that leaves us more prone to information cascades and slower to update on new discoveries/arguments.

But this is mainly a problem for uniform communities where everyone assigns the same amount of trust to everyone else. If, on the other hand, we have "thought leaders" (highly trusted researchers who severely distrust others' opinions and stubbornly refuse to update on anything other than technical evidence), then their technical-evidence grounded beliefs can filter through to the rest of the community, and we get the best of both worlds.

One of the approaches here, is to A) require people sign up, and B) don't show people aggregated predictions until they have posted their own.

I think that’s a good idea to reduce groupthink! Also, I think it can be helpful to uncover if specific individuals and sub-groups think a proposal is promising based on their estimates, since rarely will an entire group view something similarly. This could bring individuals together to further discuss and potentially support/execute the idea.

Thanks for writing up this idea in such a succinct and forceful way. I think the idea is good, and would like to help any way I can. However, I would encourage thinking a lot about the first part "If we get the EA community to use a lot of these", which I think might be the hardest part.

I think that there are many ways to do something like this, and that it's worth thinking very carefully about details before starting to build. The idea is old, and there is a big graveyard of projects aiming for the same goal. That being said, I think a project of this sort has amazing upsides. There are many smart people working on this idea, or very similar ideas right now, and I am confident that something like this is going to happen at some point.

I think this is an excellent idea and one that I’ve wanted to exist for quite a few years now! My interest in this area stems from wanting to surface compelling strategies and projects that don’t receive sufficient attention because they’re not currently “trending” in the movement. By explicitly laying out theories of change and crowdsourcing estimates for each part of those theories of change, this makes it much easier for people to to understand proposals, identify how various people and groups in the community think about a proposal, and compare the expected impact of various strategies and projects against each other.

Right now, people submit ideas and projects on the EA Forum, but that doesn’t clearly translate to action. But if it’s pretty clear that specific individuals, sub-groups in the community, or the community at large think a theory of change is promising, I think this has the potential to greatly increase awareness and the likelihood of execution of promising proposals.